In my coaching of Agile Testing I always tell testers to "Start with the When". Those familiar with Gherkin scripting might catch what I'm talking about. Gherkin is known as the Given-When-Then format, where Given is the background, When is the action being tested, and Then is the result. For example:

Given I have a magic wand

When I point it at you and wave it

Then you will turn into a frog

The key to this script is the When. It is the action which the business is paying you to build and test. By focusing on the When, everyone quickly gains an understanding of what is being built and tested.

Doing the action will result in the Then. Cause->Effect; Action->Result; When->Then.

You are now ready to figure out all that's needed to get to the When. This is the Given. What test data is needed? Where do you need to navigate? Do you need test IDs? If you understand your When and Then steps, the Given should fall into place.
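The same ordering carries over to a plain automated test. The sketch below is only an illustration of the wand scenario: `MagicWand`, `Person`, and `wave_at` are hypothetical names invented for this example, not part of any real framework.

```python
from dataclasses import dataclass


@dataclass
class Person:
    """Hypothetical stand-in for the 'you' in the scenario."""
    form: str = "human"


class MagicWand:
    """Hypothetical system under test."""

    def wave_at(self, target: Person) -> None:
        # The When: the single action the business is paying for.
        target.form = "frog"


def test_wand_turns_target_into_frog() -> None:
    # Given: only what is needed to reach the When
    wand = MagicWand()
    you = Person()

    # When: the action being tested
    wand.wave_at(you)

    # Then: the observable result of that action
    assert you.form == "frog"


test_wand_turns_target_into_frog()
```

Written this way, the When is a single line, and everything above it exists only to make that line possible.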

GoofyTesterGuy

Adding a goofy point-of-view to the software testing world

Tuesday, July 8, 2014

Tuesday, June 24, 2014

Testing Credentials

Every so often I get into discussions with testers about the value of certifications. While I'm all for additional education and gaining a better understanding of your craft, I always leave the discussions feeling empty. Developers have Software Engineering degrees and sometimes even advanced degrees; product owners often have degrees in their profession and many also have MBAs or other advanced degrees. What do testers have? Usually not much. I have come across many testers who are career changers, myself included (I was a city planner for 7 years before moving into the software development field). Many testers are BAs or Developers who "backed into" testing. During a project they were asked to also do testing because there were no testers on the project. They did a good job and kept being asked. After a while they found they enjoyed testing more than their regular work. So they began seeking the work out.

While the stories have happy endings (people stay in testing because they enjoy it), it does create a problem: lack of confidence due to a lack of education. Example: a developer (with an advanced degree and years of experience) says you need to test less. On the other side is a tester who knows what she is doing but used to be a school teacher who got laid off and felt she needed a career change. Who is most likely going to win the argument? Hint: I have yet to meet a developer who was less than 100% sure they are right. They have the degrees, they have the experience, of course they're right.

Discussions with business owners usually end up much the same. They will ignore your discussions about risk because they know what's best for the business. After all, they have the degrees, they have the experience, of course they're right.

This leaves testers caught between a rock and a hard place when trying to make a point.

Confidence starts with a strong belief that what you are doing brings value to the team. I don't think anyone questions any longer the value of testing. What is being questioned is usually what type and how much. Conferences and (on a smaller scale) local User Groups can help reinforce and validate what you are doing. These are great places to learn the tricks of the trade and see that other people are facing the same roadblocks you are. More importantly, they are great places to build your confidence and talk to other testers who also believe that what you are doing and how you are doing it is correct.

Certifications are another great step. While it's not quite as powerful as a BA in Computer Science or an MBA, a CSTE Certification (for example) does tell the world that you know the basics of software testing. You might also learn a thing or two.

The final piece of the Tester's Credentials puzzle is ongoing advanced training courses. This education provides great opportunities to learn more about your trade and gain confidence in what you are doing. It might be a 2-day "Intro to ruby/cucumber" training course or a 3-day "Becoming an agile tester" course. Here you will learn more about the discipline you are practicing. And maybe even pick up a trick or two on how to deal with Developers.

Thursday, June 19, 2014

Testers in Agile?

I recently saw a shop which was "agile", yet it didn't have any testers. It was 3 developers, 1 Analyst and a ScrumMaster. The developers did TDD and created a ruby/cucumber framework to automate the GUI testing layer. Being a tester I was quite shocked by this arrangement. Agile is a test-driven approach. How could you claim to be "agile" yet have no testers?

What shocked me most was their reason why they had no testers: "The Agile Manifesto said so". Huh? Did I miss the line in the Agile Manifesto which states that we value "people who know nothing about testing over professional testers"? What they meant to say was that there are 12 Principles behind the Agile Manifesto. The ones they keyed in on were:

- Business people and developers must work together daily throughout the project.

- Agile processes promote sustainable development. The sponsors, developers, and users should be able to maintain a constant pace indefinitely.

It says nothing about the role of testers. In fact, if you read through the Manifesto and its Principles, there is nothing, NOTHING, about testing. This particular group was trying to be as "pure" agile as they thought they could. And since there was no mention of testers in the Principles, they had none. To their credit, defects were down significantly from their waterfall days, and releases were going in monthly and were significantly smoother than before (they used to be on a 4X/year schedule that always turned into a 3X/year schedule because there was so much clean-up from each release).

This really got me thinking. Was testing a soon-to-be-extinct career along with Project Managers?

Fortunately my career concern was short-lived, as this testerless group's velocity had peaked and was now in rapid decline. When analyzed, the reason for this was ever-increasing re-work due to defects and testing technical debt (Quadrant 4 tests, for example, were not being executed). With the help of some new agile coaches they began to realize testing was a full-time effort. And since developers need to develop and not test, they needed at least 1, possibly 2 testers on each team to "guide" the testing effort.

My point in all of this is that sometimes you need to read between the lines and understand the spirit of what is being said rather than the literal. Yes, testers are not mentioned. But neither are Analysts or ScrumMasters or agile coaches. Yet each is critical to the success of an agile team. The spirit of "Business people and developers must work together.." is that this is a team effort. And teams need to work together to finish the project.

Tuesday, June 17, 2014

A better way of handling Defects

For years I viewed defects as fights because that is usually what they turned into. And I hated it. Developers view defects as an assault on their reputation. Every defect opened is testing's way of saying "you're not perfect." So developers took it personally and came into defect meetings with guns loaded. The more show-stopper defects, or the greater the volume of defects, the bigger the developers' guns became. As the "Quality Gatekeepers" of the project, Testers needed to bring in just as much ammo because it was our perceived job to ensure these defects were resolved. Numerous grenades were thrown and numerous defects went into production.

Then I began working on agile projects. At first I was shocked by the lack of defects: both the low number of defects coming out of development and the fact that none were logged. Being new to agile I decided to roll with this and see how it played out. To my amazement, it played out just like many agilistas said it would: working code was delivered faster. They were quick to point out that it took longer for me to log and manage the defect than it did for me to have a conversation with the developer and get it fixed. I actually timed this at first to check. To my amazement, it was true. And it wasn't a fluke. It happened again and again. There weren't many defects. But when there were, they played out just as the agilistas expected.

The other thing that was amazing to me was how fast we were able to find the problem. This was due to the test coverage on all layers (Unit, Integration, GUI). Defects aren't about just finding a problem. Defects are about finding where the problem is. And this testing stack did a great job of layering and finding precisely where the problem was. 1 or 2 new Unit Tests, an Integration test and a GUI test later and we had working code.

With less of a need to focus on what is broken or where, I would like to propose a different approach. When a defect arises, in waterfall or agile or any other SDLC, get it fixed. But focus your questions and research more on the process which caused the defect to be created in the first place. If the root cause was a requirement miss, what can you do to prevent requirement misses in the future? Is there a coding miss? What can you (or dev) do to prevent coding misses in the future?

This approach worked wonders for me. My team was able to find that test cases were not being fully fleshed out for higher risk features. By bringing the entire team to the Amigos we were able to better determine risk levels and better flesh out test cases. And defects all but went away.
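One lightweight way to keep the focus on the process is to tally root causes rather than individual defects. The sketch below is only an illustration: the defect IDs and root-cause labels are made-up sample data, and `root_cause_summary` is a hypothetical helper.

```python
from collections import Counter

# Hypothetical defect log: (defect id, root cause). Sample data only.
defects = [
    ("D-1", "requirement miss"),
    ("D-2", "coding miss"),
    ("D-3", "requirement miss"),
    ("D-4", "test case gap"),
    ("D-5", "requirement miss"),
]


def root_cause_summary(defect_log):
    """Count defects per root cause, most frequent first."""
    return Counter(cause for _, cause in defect_log).most_common()


for cause, count in root_cause_summary(defects):
    print(f"{cause}: {count}")
```

The cause at the top of the list is the part of the process worth questioning first.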

Focus on the why, not the what...

Friday, June 13, 2014

The Agile Manifesto vs. The German Beer Purity Law

I have lost count of how many times I have heard people say "...but we're not pure agile" or "They're not agile because they do..." This blog was sparked by such statements and it got me thinking: is there a "pure" agile? Does putting up an agile board automatically make you agile? If you don't have a ScrumMaster are you agile? I was once in a shop whose pilot agile team had no testers because they were "pure agile" and "there are no testers in agile."

I approached answering this question as a tester would, by asking: what would a minimal agile shop look like? What would an ideal agile shop look like? And if yours falls somewhere in between then you are, to some degree, agile. When put in this context every shop is agile to varying degrees. Some do it well, some don't; some have dedicated agile coaches, others don't; some have professional testers who know what they are doing, some have developers testing. It's all agile.

But my true epiphany came at a bar. Hard to believe, huh? I was having some fancy German beer that stated on the bottle something to the effect of "brewed under the German Beer Purity Law." I am proud to say that I knew what it meant. The German Beer Purity Law (Reinheitsgebot) of 1487 states:

beer can only be brewed using water, malt and hops.

In Germany this is a big deal. Truth be told, it is the law for most beers brewed in Germany. But does that make Reinheitsgebot-adhering beers better? No, of course not. It just means that those brewed with ingredients not on the list don't get the Reinheitsgebot seal of approval. But they are still beer.

As I got to thinking about the Reinheitsgebot it made me think about how many different varieties of beer this bar served and how few probably adhered to the Reinheitsgebot. Just like the software development world and the Agile Manifesto. There are numerous shops which are agile to one degree or another. The percentage which are "pure agile" (defined as strictly adhering to the Agile Manifesto and its Principles) is probably <5%, just as the beers at this bar were probably <5% Reinheitsgebot-adherent. But that is not a bad thing. It simply means each shop is at a different stage of agile evolution. Some are not as evolved, some are much more evolved, some are in the middle; but all can call themselves "agile".

Both the Reinheitsgebot and the Agile Manifesto serve as a purity beacon. And neither is meant to be absolute. They are guidelines. Nothing more, nothing less. We are humans and it is our nature to be creative, innovative, and do what we are told we are not allowed to do. If I have no malt, but an abundance of wheat, I'm using wheat instead. Reinheitsgebot be damned. Does that make my beer any less of a beer? In Germany, maybe. But everywhere else in the world, no. My beer is still beer and my software development style is still agile.

Thursday, June 12, 2014

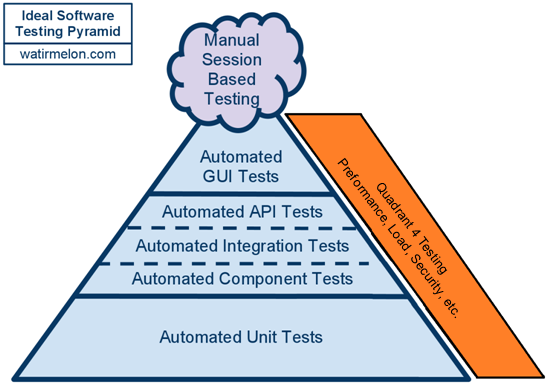

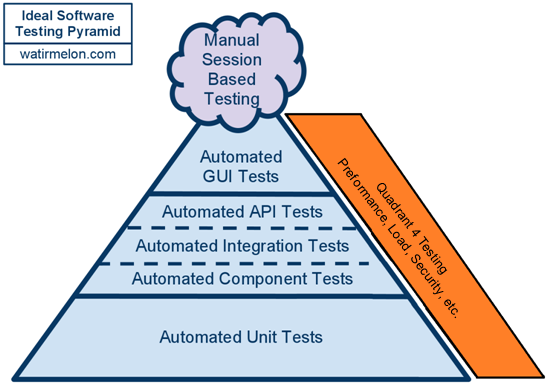

Expanding the test triangle

There is a saying: Never ask a question you don't know the answer to. I'll take that a step further and say: Never ask a question you don't want to hear the answer to. So I have to chuckle internally when I am asked the question: "What do I need to test?" because no one really wants to hear my answer: "As the test engineer you are responsible for the entire stack of testing. You don't have to do the entire stack yourself. Developers can help; specialists can help; the business can help; you can do some of the testing yourself. It doesn't matter who does it. But you are responsible to make sure all layers of testing get done."

Typical response: "Wait. How do developers help with my testing? And did you say the business? I thought testing was only about making sure the requirements were built and the system still works."

Over the years I too have struggled with the question of what to test. Product Risk Analyses (PRAs) have helped me considerably to focus in on those areas of highest risk. Every discussion I have about what to test has a discussion about PRAs in it somewhere. But my real aha moment came when reading Succeeding with Agile by Mike Cohn. Mike laid out the automation testing triangle, which spoke of 3 layers of tests:

- Automated GUI Tests

- Automated Integration Tests

- Automated Unit Tests

But there was something missing. During sprints I am often asked "When do we do Performance Tests?" Being the anti-technical debt person I am, I always answered "by the end of the sprint." While this answer worked, it didn't satisfy me much because it was a test, an automated test even, but didn't fit in the pyramid. The tests in question fall into Quadrant 4 of Brian Marick's Testing Quadrants. All are technology-facing tests which critique the product and are almost always automated. They include: security testing, performance and load testing, data migration testing, and "ility" tests (scalability, maintainability, reliability, installability, compatibility, etc.). But what to do with them?

The solution I came up with is the addition of Q4 Tests to the side of the testing pyramid. This simple addition now provides a home on the pyramid for every type of test created.

My new testing pyramid also shows testers that you can do Q4 tests at any level of the pyramid. For example, you do not have to wait until the end of the Sprint to do all of your Performance testing. You can begin by tracking execution times for each Unit, Integration and GUI test. This will give you a first insight into performance; you could also have the developers run "best practice" evaluation tools against their code to help determine maintainability, etc.; you can certainly load test various components and the GUI as functionality is added.

I now share this updated pyramid with every shop I go into.

Tuesday, June 10, 2014

The delusion of Polyskilling

Quick question: have you had surgery lately? Did you do it yourself or did you have someone else do it?

Whenever I ask this question I get a lot of chuckles. Of course you didn't do it yourself. Nobody does their own surgery. But why not? There are lots of reasons why not. The answers I get usually boil down to 3 things:

- Education - a doctor has over 10 years of education on how to do the surgery. You have none.

- Experience - a doctor hopefully has done hundreds of these types of surgeries. You have done zero.

- Tools - a doctor has a well-equipped office or hospital operating room with all the latest tools. You have none of these in your garage or basement.

Software development can be a lot like doing surgery, needing highly experienced, highly trained people. Have you ever tried to build an e-commerce web portal with millions of items for sale globally? It's pretty complex. But some aspects of software development can also be like fixing your car or mowing your lawn: best if done by professionals, but with some minimal training, experience, and the right tools anyone can do it. I do a lot of ruby/cucumber training. Within 15 minutes I can teach anyone how to write a Gherkin script. Given an hour I can even have them writing pretty good Gherkin scripts. They are fairly simplistic.

Where I see many companies failing is when they assume everything is as simple as writing a Gherkin script, when in fact most tasks are much more like surgery and require a highly trained, highly experienced doctor. I call this polyskilling: the delusion that anyone on a team can do everyone else's tasks with little to no training.

As a tester I am directly in the crosshairs of this belief since anyone can write a Gherkin script. And testing is only about writing and executing scripts, right? WRONG!!!!

I am not going to be so bold as to state that Testing is on par with Development, with both being as difficult as brain surgery. But I would go so far as to say it is at least as difficult as working on a car. You might not have to know the details of the surgery, but as a tester you MUST have a good understanding of what is going on so when something does go wrong you can point it out. This takes some level of technical understanding about the app being built, and it takes some level of understanding of how to test.

This is where polyskilling can kill a team. Without training, coaching, years of experience, and in some cases even specialized testing tools, defects will slip through the cracks. You can't just throw a Gherkin script at a problem and hope it catches everything that could potentially go wrong. A good tester understands risk and builds their testing stack accordingly; a good tester asks the developers the right questions about the system under test to determine where the tests are needed; a good tester can look at a system and know the best tools to pull out of their tool belt to test the system. And this knowledge is something only an experienced tester can bring to the table.
